Wait for bash background jobs in script to be finished

There's a bash builtin command for that.

> `wait [n ...]`
>
> Wait for each specified process and return its termination status. Each *n* may be a process ID or a job specification; if a job spec is given, all processes in that job’s pipeline are waited for. If *n* is not given, all currently active child processes are waited for, and the return status is zero. If *n* specifies a non-existent process or job, the return status is 127. Otherwise, the return status is the exit status of the last process or job waited for.
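A minimal sketch of the builtin in a script, showing the three return-status cases from the man page. The `(sleep ...; exit ...)` subshells are placeholders for real background work, and the variable names are made up for illustration.

```bash
#!/usr/bin/env bash
# Placeholder background jobs: each subshell stands in for real work.
(sleep 0.2; exit 0) & pid_ok=$!
(sleep 0.1; exit 3) & pid_fail=$!

# Waiting on one PID returns that job's exit status.
# The "|| var=$?" form records a non-zero status and also stays safe under `set -e`.
status_ok=0;   wait "$pid_ok"   || status_ok=$?
status_fail=0; wait "$pid_fail" || status_fail=$?
echo "ok job: $status_ok, failing job: $status_fail"

# A PID that is not a child of this shell (here: the shell itself) gives 127.
status_missing=0; wait "$$" 2>/dev/null || status_missing=$?
echo "non-child PID: $status_missing"

# Without arguments, wait blocks until all children exit and returns 0,
# even though one of these jobs fails.
(sleep 0.1; exit 5) &
(sleep 0.1; exit 0) &
wait
status_all=$?
echo "plain wait: $status_all"
```

Note that plain `wait` tells you nothing about failures; if you need each job's status, keep the PIDs from `$!` and wait on them one by one.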

Using GNU Parallel will make your script even shorter and possibly more efficient:

```bash
parallel 'echo "Processing "{}" ..."; do_something_important {}' ::: apache-*.log
```

This will run one job per CPU core and keep doing so until all files are processed.

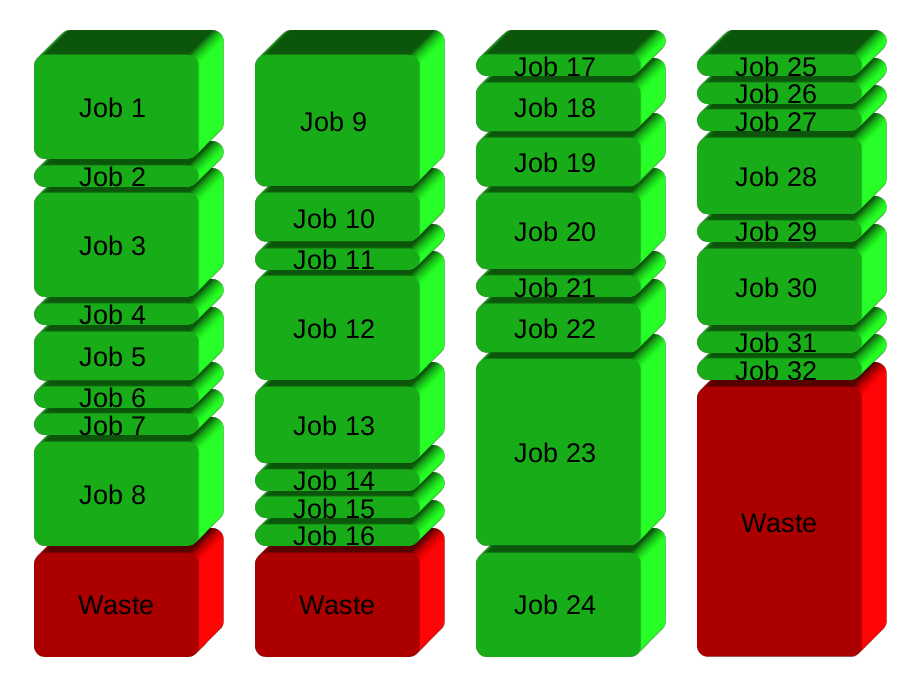

Your solution will basically split the jobs into groups before running. Here 32 jobs in 4 groups:

GNU Parallel instead spawns a new process when one finishes - keeping the CPUs active and thus saving time:
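GNU Parallel does this scheduling for you. For comparison, here is a minimal pure-bash sketch of the same "refill a slot when a job finishes" idea (not how GNU Parallel is implemented), assuming bash 4.3 or later for `wait -n`. `max_jobs`, `process_file` and the `file-*.log` names are hypothetical placeholders.

```bash
#!/usr/bin/env bash
# Pure-bash sketch: keep at most max_jobs jobs running, start a new one
# whenever any job finishes. Requires bash >= 4.3 for `wait -n`.

max_jobs=4                      # assumption: roughly one slot per CPU core
log=$(mktemp)                   # collects one line per finished job
trap 'rm -f "$log"' EXIT

# Hypothetical stand-in for the real per-file work.
process_file() {
  sleep 0.1
  echo "done: $1" >> "$log"
}

running=0
for f in file-{1..10}.log; do   # hypothetical file names
  if (( running >= max_jobs )); then
    wait -n                     # block until any one job finishes...
    running=$((running - 1))    # ...which frees a slot for the next file
  fi
  process_file "$f" &
  running=$((running + 1))
done
wait                            # wait for the jobs that are still running
```

Unlike GNU Parallel, this sketch ignores each job's exit status. The counter is updated with `running=$((...))` rather than `(( running++ ))`, because the latter returns a non-zero status when the old value is 0 and would abort a script running under `set -e`.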

To learn more:

- Watch the intro video for a quick introduction: https://www.youtube.com/playlist?list=PL284C9FF2488BC6D1

- Walk through the tutorial (`man parallel_tutorial`). Your command line will love you for it.

I had to do this recently and ended up with the following solution:

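```bash
while true; do
    # Returns as soon as any background job exits, with that job's status
    wait -n || {
        code="$?"
        # 127 = no jobs left: exit the subshell with 0; otherwise pass the failure on
        ([[ $code = "127" ]] && exit 0 || exit "$code")
        break
    }
done
```

Here's how it works: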

`wait -n` returns as soon as one of the (potentially many) background jobs exits, with that job's exit status. As long as the jobs succeed, it returns 0 and the loop goes on, until it returns a non-zero code:

- `127`: there are no background jobs left to wait for, i.e. the last one has exited successfully. In that case, we ignore the exit code, exit the subshell with code 0 and break out of the loop.
- Any other code: one of the background jobs failed. We just exit the subshell with that exit code.

With `set -e`, this makes the script terminate early and pass through the exit code of any failed background job.
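A self-contained sketch that puts the loop to work, assuming bash 4.3 or later (`wait -n` does not exist before that). The helper `run_all` and the `bash -c` test commands are hypothetical; the function body is a `( ... )` subshell, so `set -e` ends only that subshell and the calling script can report the status.

```bash
#!/usr/bin/env bash
# Requires bash >= 4.3 (for `wait -n`).

# Hypothetical helper: start each argument as a background job, then use the
# wait loop above to stop at the first failing job.
# The ( ... ) body runs in a subshell, so `set -e` only ends that subshell.
run_all() (
  set -e
  for cmd in "$@"; do
    bash -c "$cmd" &
  done
  while true; do
    wait -n || {
      code="$?"
      ([[ $code = "127" ]] && exit 0 || exit "$code")
      break
    }
  done
)

run_all 'sleep 0.1' 'sleep 0.2'
status_ok=$?
echo "all jobs succeeded: status $status_ok"

# The 'sleep 1' job is still running when the failure is detected.
run_all 'sleep 0.1' 'sleep 0.2; exit 4' 'sleep 1'
status_fail=$?
echo "one job failed: status $status_fail"
```

Two caveats. Call the function as a plain command, not as `run_all ... || handle_error`: bash ignores `set -e` inside a function invoked in such a context. And a job that itself exits with 127 (for example, "command not found") is indistinguishable from "no jobs left" and is treated as success.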